Objective:

Fetch YouTube video URLs from a predefined text file and download each of them (yes, hence the name "downloadem"). Each download process runs at the same time as, and independently of, the others.

Processes:

1. Read a newline-delimited file of URLs.

2. Save the URLs into a list.

3. Create a separate download process for each URL. Every process executes an external YouTube downloader application (youtube-dl).

4. Wait for the exit signal (:DOWN) from each process. Once it is received, meaning that download has completed, finalize it by writing this info into a log file. This way, no process has to wait for another one that might still be in progress.
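Steps 1 and 2 could look roughly like the sketch below. The reading code isn't shown in the original, so the module name `Downloadem.UrlList` and its functions `read!/1` and `parse/1` are hypothetical; the sketch assumes one URL per line and skips blank lines.

```elixir
defmodule Downloadem.UrlList do
  # Hypothetical module: turns the newline-delimited URL file into a list.

  # Reads the whole file and returns its URLs as a list of strings.
  def read!(path) do
    path
    |> File.read!()
    |> parse()
  end

  # Splits on newlines, trims surrounding whitespace (including the trailing
  # "\r" left by CRLF files) and drops blank lines.
  def parse(text) do
    text
    |> String.split("\n")
    |> Enum.map(&String.trim/1)
    |> Enum.reject(&(&1 == ""))
  end
end
```

Keeping `parse/1` separate from the file access makes the splitting logic easy to try on a plain string.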

The Story:

At first, out of curiosity, I imagined a small application that could download YouTube videos from a predefined list. I thought the whole process would be: read the URLs from the list, download all of them at the same time, and write those activities into a flat log file. That's it!

I knew that applications like youtube-dl exist, so that one came to mind when I thought about a download engine. It's a very complex piece of software with an immense combination of parameters we can use to get the kind of download result we want.

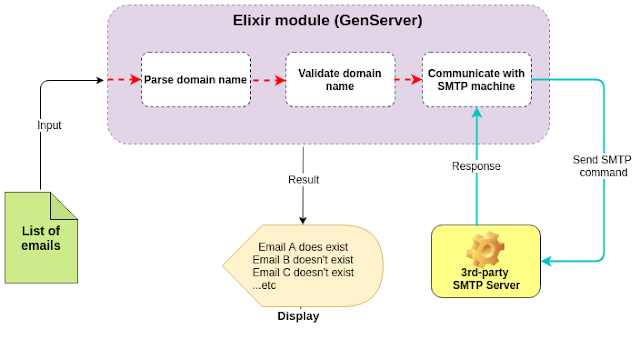

Next, I found out about ElixirYoutubeDl, an Elixir wrapper for youtube-dl. It also uses youtube-dl as its download engine. But after a brief look at the source code, I wasn't quite sure it was what I wanted, as I'm not familiar (yet) with the GenServer approach. I couldn't see the "concurrent" part, the part that shows it executing several download processes at the same time. Maybe I was wrong, given my limited understanding of GenServer.

As suggested by the Elixir documentation, I chose to use Task.async followed by a call to Task.await to achieve my objective. My first version of the script was:

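```elixir
def do_concurrent_download(list_of_url) do
  list_of_url
  |> Enum.map(fn url ->
    # Start one task per URL; each task runs the downloader in its own process
    Task.async(fn -> url |> run_downloader() end)
  end)
  # Block until every task replies; results come back in the same order as the URLs.
  # Note: Task.await/1 uses the default 5_000 ms timeout.
  |> Enum.map(&Task.await/1)
end
```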
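Because `run_downloader/1` isn't shown, here is a self-contained variant that can be tried without youtube-dl. It is a sketch, not the original script: the module name `Downloadem.Concurrent` and the extra `downloader` parameter (a function standing in for `run_downloader/1`) are hypothetical, and it passes `:infinity` to Task.await because real downloads can easily exceed the default five-second timeout.

```elixir
defmodule Downloadem.Concurrent do
  # Hypothetical, self-contained variant of do_concurrent_download/1.
  # `downloader` stands in for run_downloader/1, which is not shown in the original.
  def do_concurrent_download(list_of_url, downloader) do
    list_of_url
    # One Task.async per URL: each download runs in its own process.
    |> Enum.map(fn url -> Task.async(fn -> downloader.(url) end) end)
    # :infinity lifts Task.await's default 5_000 ms timeout, since a
    # video download can take much longer than five seconds.
    |> Enum.map(&Task.await(&1, :infinity))
  end
end
```

In the real script, the second argument would be something like `&run_downloader/1`. Three jobs that each sleep for 200 ms finish in roughly 200 ms in total rather than 600 ms, because they run concurrently.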

This version worked just fine. But I was curious: what happens behind the curtain every time run_downloader() completes a download and its process terminates? What kind of signal is propagated after that termination? I couldn't trap and track it, because Task.await neatly wraps the back-and-forth communication between the process created by Task.async and its parent. So I chose another approach, one I could comprehend as a rookie.

Then I found another great article about Task.async ("Beyond Task.async") that takes a different angle on how this module can be used. Its detailed, step-by-step explanation inspired the approach that became the final version of my YouTube downloader. It was very straightforward and understandable, although I needed to print some messages with IO.puts here and there while debugging.

So, to highlight the inter-process communication, I commented out the Task.await part and let the next function (trap_and_collect_exit_signal) deal with it. Here is a snippet of that function:

```elixir
def trap_and_collect_exit_signal(tasks) do
  receive do
    # When a monitored process (a task) dies, a message is delivered to the
    # monitoring process (the parent) in the shape of:
    #   {:DOWN, ref, :process, object, reason}
    # e.g.
    #   {:DOWN, #Reference<0.906660723.3006791681.40191>, :process, #PID<0.118.0>, :noproc}
    {:DOWN, monitor_reference, :process, task_pid, _reason} ->
      ...
      Tools.write_result_log("Download END : [#{url}]")
      IO.puts "Download END : [#{url}]"
      ...

    # When a task terminates, the parent process receives back the monitor_reference
    # that was created when the task was started by Task.async. Task.async sets up a
    # monitor on the task (the equivalent of Process.monitor(task_pid)), which is how
    # the parent can watch the task's status. E.g.:
    #   monitor_reference = #Reference<0.906660723.3006791681.40191>
    # The message carries the task's result; here {youtube_dl_message, 0}, i.e. exit status 0.
    other_msg ->
      {monitor_reference, {youtube_dl_message, 0}} = other_msg
      Tools.write_result_log("#{inspect(monitor_reference)}\n" <> youtube_dl_message)
      ...
  end
end
```
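Since parts of the snippet are elided, here is a self-contained sketch of the same trap-and-collect idea. It is a reconstruction under assumptions, not the author's code: `Downloadem.Collector`, `start/2` and `collect/2` are hypothetical names, the downloader is passed in as a function returning `{output, exit_status}` (the shape System.cmd/3 returns), and log lines are returned as a list instead of being written by Tools.write_result_log.

```elixir
defmodule Downloadem.Collector do
  # Hypothetical sketch of trap_and_collect_exit_signal/1 without Task.await.

  # Starts one task per URL and remembers which monitor reference belongs to which URL.
  def start(urls, downloader) do
    Enum.map(urls, fn url ->
      %Task{ref: ref} = Task.async(fn -> downloader.(url) end)
      {ref, url}
    end)
  end

  # Loops until every pending task has reported :DOWN; returns log lines in arrival order.
  def collect(pending, log \\ [])

  def collect([], log), do: Enum.reverse(log)

  def collect(pending, log) do
    receive do
      # The monitor reports that a task process has exited: drop it from the pending list.
      {:DOWN, ref, :process, _pid, _reason} ->
        case List.keytake(pending, ref, 0) do
          {{^ref, url}, rest} -> collect(rest, ["Download END : [#{url}]" | log])
          nil -> collect(pending, log)
        end

      # The task's reply, tagged with its monitor reference, arrives before its :DOWN.
      {ref, {output, exit_status}} when is_reference(ref) ->
        collect(pending, ["#{inspect(ref)} exit=#{exit_status}\n" <> output | log])
    end
  end
end
```

The loop ends only when the pending list is empty, so one slow download never blocks logging for the others. Note that Task.async also links each task to its caller, so a crashing download would take the caller down unless exits are trapped.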

When a process created by Task.async terminates (completes its job), the following sequence is triggered:

1. The task sends a message back to the parent process, tagged with the monitor reference and carrying the task's return value (here, youtube-dl's output and exit status).

2. Right after that message is sent, the task process exits, and the parent receives a :DOWN message for the same monitor reference, which finalizes the termination.

By receiving these two messages, the parent process knows that one of its children is gone. It then keeps listening for further termination messages until the last one has arrived. All of a child's information (owner/parent pid, pid, and monitor reference) is kept in the %Task{} struct returned by Task.async. So once the :DOWN message for a given process is received, it is matched against these structs and that task is removed from the list of pending tasks.
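To watch the two messages directly, the sketch below starts a single Task.async job and receives both messages itself instead of calling Task.await. `Downloadem.SignalDemo` and `run/1` are hypothetical names, and the job function stands in for a download.

```elixir
defmodule Downloadem.SignalDemo do
  # Hypothetical helper: runs one Task.async job and returns what the parent
  # receives, instead of letting Task.await/1 hide the messages.
  def run(job) do
    # %Task{} keeps the task pid, the monitor reference and the owner (parent) pid.
    %Task{pid: pid, ref: ref, owner: owner} = Task.async(job)

    # 1. The task's return value arrives tagged with the monitor reference.
    result =
      receive do
        {^ref, value} -> value
      end

    # 2. The monitor then reports that the task process has exited.
    reason =
      receive do
        {:DOWN, ^ref, :process, ^pid, why} -> why
      end

    {result, reason, owner}
  end
end
```

For a job that returns `{"ok", 0}`, `run/1` yields `{{"ok", 0}, :normal, owner}`, where `owner` is the calling process: the reply comes first, then a :DOWN with reason `:normal` because the task finished without crashing.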

That's all. This process-termination mechanism was what I was curious about at the beginning. Now it's pretty clear how it works, although I may still be missing something here and there, but that's part of the learning process.

Thanks!

Lessons Learned:

1. Calling an external command from inside Elixir using System.cmd.

2. How to create processes that run concurrently using Task.async.

3. The mechanics of process termination: how a process sends a termination signal to its parent process, which we can trap and act upon.
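For the first lesson, a run_downloader/1 built on System.cmd/3 might look like the sketch below. It is an assumption rather than the original code: the module name is hypothetical, no youtube-dl options are passed (only the URL), and the executable is a parameter so the function can be tried with a harmless command such as `echo`.

```elixir
defmodule Downloadem.Downloader do
  # Hypothetical sketch of run_downloader/1 built on System.cmd/3.
  # The executable defaults to "youtube-dl"; passing another command name
  # (e.g. "echo") lets you try the function without downloading anything.
  def run_downloader(url, executable \\ "youtube-dl") do
    # System.cmd/3 blocks until the external program exits and returns
    # {collected_output, exit_status}; stderr_to_stdout: true merges stderr into the output.
    System.cmd(executable, [url], stderr_to_stdout: true)
  end
end
```

Because System.cmd/3 waits for the program to exit, each call is wrapped in its own task to get concurrent downloads, and its `{output, exit_status}` result is what a pattern like `{youtube_dl_message, 0}` matches on.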